How to make a simple CHAT-BOT using TensorFlow

No doubt, a chatbot is a very good solution for many problems like QnA, FAQ, Basic Conversation, and having a chatbot in every solution also helps the business answer the customer in an innovative way compared to writing emails.

Nowadays we have many chatbot solutions available, all you have to do is drag and drop as per your message and response, and done you have a chatbot ready with a single click.

But in this Blog we shall see how can we create our own chatbot using Tensorflow and also understand how this simple conversation chatbot works internally.

Full Code available on Google Collab -> Link

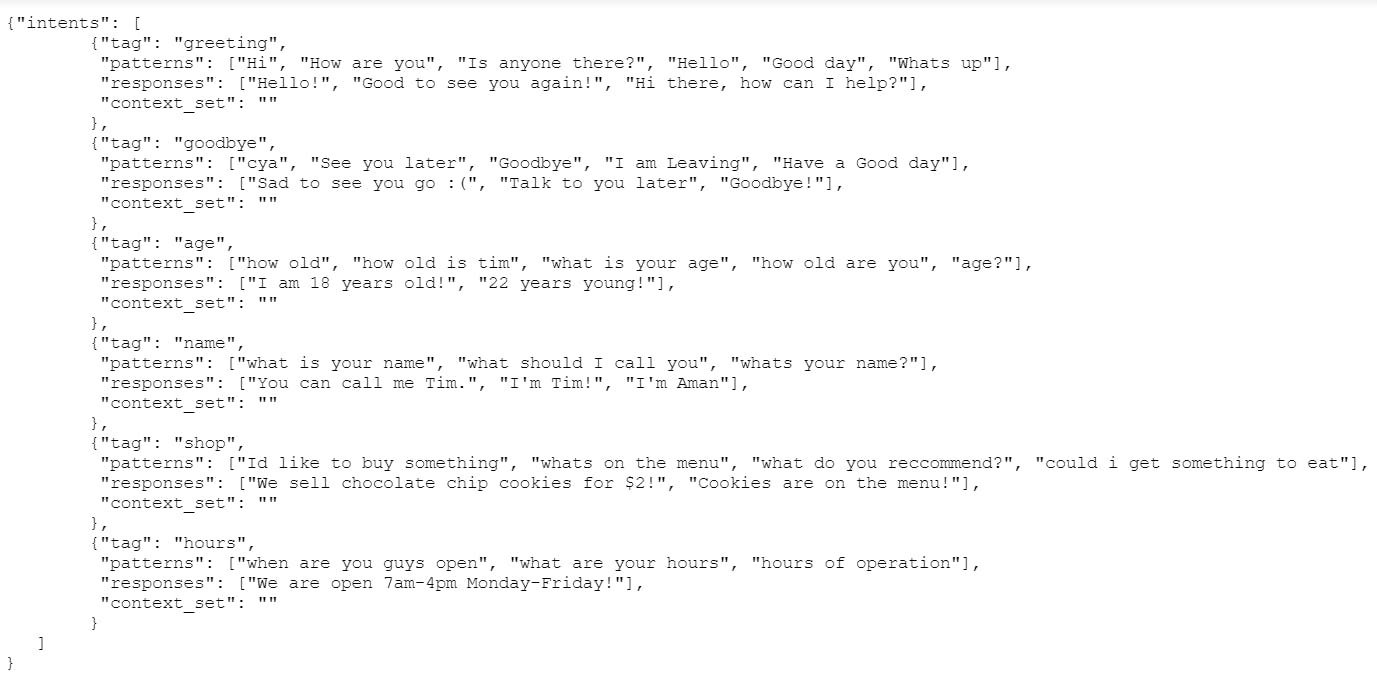

First let us understand how we prepare the dataset, for chat-bot we need to prepare the dataset in form of question, answer and for each question intent. So we build a classification model which accepts a question and then it classifies the question in its respective intent and based on intent we can go back to dataset and randomly pick answer.

Now, Let us start by importing important libraries required

#importing the libraries

import tensorflow as tf

import numpy as np

import pandas as pd

import json

import nltk

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.layers import Input, Embedding, LSTM , Dense,GlobalMaxPooling1D,Flatten

from tensorflow.keras.models import Model

Now we need to read the intent file and then create a dataset with question and tag respectively

#importing the dataset

with open('intents.json') as content:

data1 = json.load(content)

#getting all the data to lists

tags = []

inputs = []

responses={}

for intent in data1['intents']:

responses[intent['tag']]=intent['responses']

for lines in intent['patterns']:

inputs.append(lines)

tags.append(intent['tag'])

#converting to dataframe

data = pd.DataFrame({"inputs":inputs,

"tags":tags})

print(data)

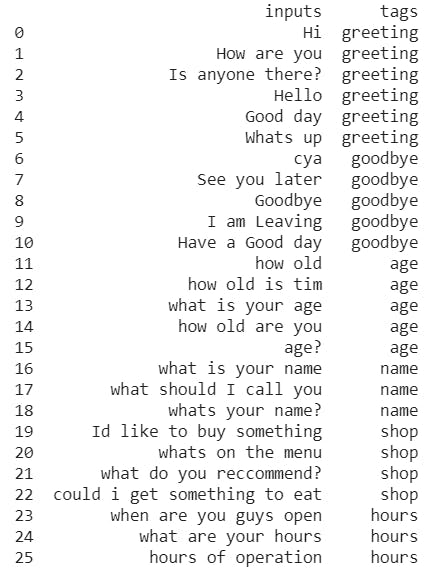

Once we prepare the dataset we can view it

Next, we apply text preprocessing i.e. we ll clean the data first (removing special character etc..) and then we tokenize the text and convert the clean text into sequence and then apply padding (we add padding so that all the text are of same length)

#removing punctuations

import string

data['inputs'] = data['inputs'].apply(lambda wrd:[ltrs.lower() for ltrs in wrd if ltrs not in string.punctuation])

data['inputs'] = data['inputs'].apply(lambda wrd: ''.join(wrd))

#tokenize the data

from tensorflow.keras.preprocessing.text import Tokenizer

tokenizer = Tokenizer()

tokenizer.fit_on_texts(data['inputs'])

train = tokenizer.texts_to_sequences(data['inputs'])

#apply padding

from tensorflow.keras.preprocessing.sequence import pad_sequences

x_train = pad_sequences(train)

#encoding the outputs

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

y_train = le.fit_transform(data['tags'])

Now we need to define the input shape and the vocabulary size before creating the model and training the model

#input length

input_shape = x_train.shape[1]

print(input_shape)

#define vocabulary

vocabulary = len(tokenizer.word_index)

print("number of unique words : ",vocabulary)

#output length

output_length = le.classes_.shape[0]

print("output length: ",output_length)

Now we create a Neural Network Model using LSTM and Embedding layer

#creating the model

i = Input(shape=(input_shape,))

x = Embedding(vocabulary+1,10)(i)

x = LSTM(10,return_sequences=True)(x)

x = Flatten()(x)

x = Dense(output_length,activation="softmax")(x)

model = Model(i,x)

#compiling the model

model.compile(loss="sparse_categorical_crossentropy",optimizer='adam',metrics=['accuracy'])

#training the model

train = model.fit(x_train,y_train,epochs=200)

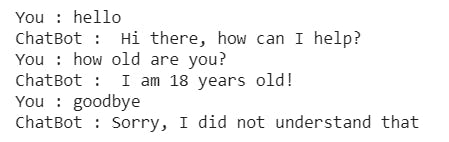

Now after training the data we can test on the model

#chatting

import random

while True:

texts_p = []

prediction_input = input('You : ')

#removing punctuation and converting to lowercase

prediction_input = [letters.lower() for letters in prediction_input if letters not in string.punctuation]

prediction_input = ''.join(prediction_input)

texts_p.append(prediction_input)

#tokenizing and padding

prediction_input = tokenizer.texts_to_sequences(texts_p)

prediction_input = np.array(prediction_input).reshape(-1)

prediction_input = pad_sequences([prediction_input],input_shape)

#getting output from model

output = model.predict(prediction_input)

check = output

output = output.argmax()

#finding the right tag and predicting

response_tag = le.inverse_transform([output])[0]

if max(check[0]) < 0.5:

print("ChatBot : Sorry, I did not understand that")

else:

print("ChatBot : ",random.choice(responses[response_tag]))

if response_tag == "goodbye":

break

The above code can be executed in Collab or in Jupyter Notebook , but before that please install the library like tensorflow